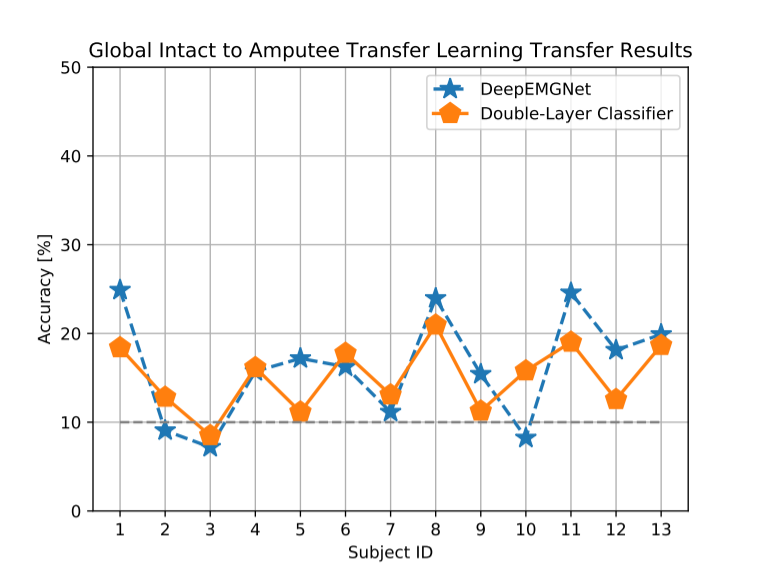

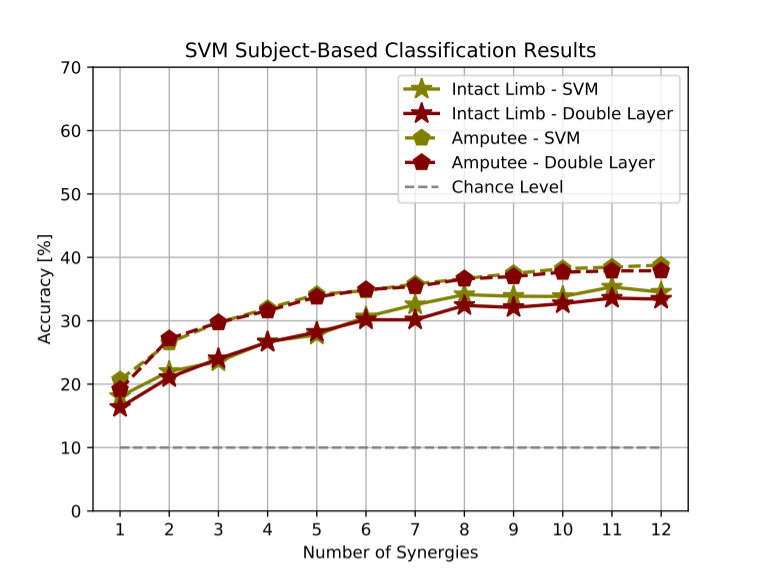

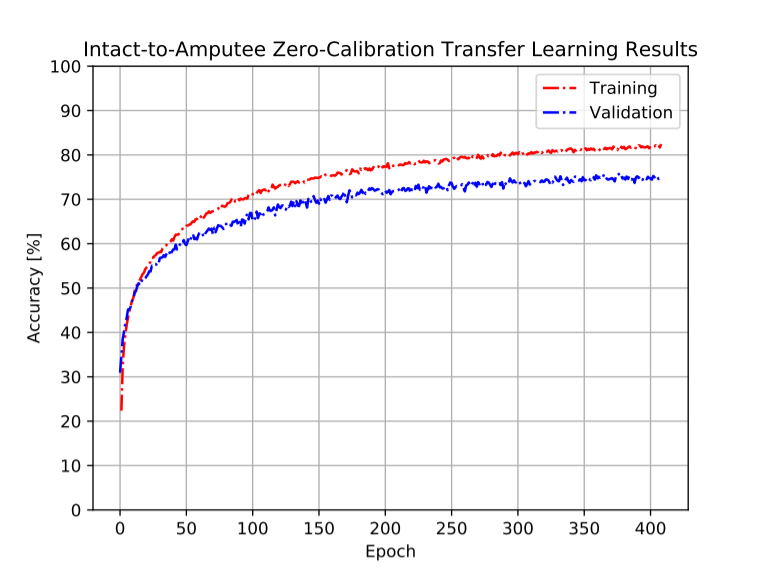

We propose DeepEMGNet, a novel convolutional neural network encoder architecture designed to extract subject-invariant, transferable representations from electromyography (EMG) signals. We train an EMG feature extractor for hand gesture recognition on data from participants with intact limbs and use it to predict the attempted hand gesture type from EMG recordings of amputee patients. We also present a traditional machine learning approach, a double-layer muscle synergy driven classification protocol, as a point of comparison for DeepEMGNet on both subject-specific classification and transfer learning problems. Our results, evaluated on a public dataset with a rich selection of hand gestures, suggest that our novel focus on intact-to-amputee transfer learning shows significant promise for multi-class EMG-based gesture recognition.